An AWR (Automatic Workload Repository) report presents performance statistics for the database server, such as system and session statistics, segment usage statistics, resource-intensive SQL statements, time model statistics and buffer cache details.

The important sections of the report are listed below. For better understanding, a snap from an AWR report is provided for each section.

- Elapsed Time

- Top 5 timed events

- SQL statistics

- Time Model Statistics

1. Elapsed Time

Elapsed time is the difference between the begin snapshot time and the end snapshot time. It should match the duration of the load test at the desired load. DB time is accumulated across all sessions, so it can exceed elapsed time when several sessions are active at once. If DB time is less than elapsed time, fewer than one session on average was active in the database, and the bottleneck is unlikely to be there; otherwise further analysis is required to establish whether the database is the bottleneck.
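The comparison described above can also be made directly against the AWR history views. The following is a minimal sketch, assuming the snapshot IDs 970 and 1032 (placeholders, substitute your own) and assuming the instance was not restarted between the two snapshots, since the time model values are cumulative from instance startup.

```sql
-- Compare elapsed time with DB time for one snapshot pair.
-- 970 and 1032 are placeholder snapshot IDs.
SELECT ROUND((CAST(e.end_interval_time AS DATE)
            - CAST(b.end_interval_time AS DATE)) * 24 * 60, 1) AS elapsed_min,
       ROUND((et.value - bt.value) / 1000000 / 60, 1)          AS db_time_min
FROM   dba_hist_snapshot b
       JOIN dba_hist_snapshot e
         ON  e.dbid = b.dbid
         AND e.instance_number = b.instance_number
       JOIN dba_hist_sys_time_model bt
         ON  bt.snap_id = b.snap_id
         AND bt.dbid = b.dbid
         AND bt.instance_number = b.instance_number
       JOIN dba_hist_sys_time_model et
         ON  et.snap_id = e.snap_id
         AND et.dbid = e.dbid
         AND et.instance_number = e.instance_number
WHERE  b.snap_id = 970
AND    e.snap_id = 1032
AND    bt.stat_name = 'DB time'   -- stored in microseconds
AND    et.stat_name = 'DB time';
```

Dividing db_time_min by elapsed_min gives the average number of active sessions over the interval.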

2. Top 5 timed events

This section can be read as the top five bottlenecks in the database. The other sections of the report break these five timed events down into more detailed metrics such as SQL Statistics, IO Statistics, Buffer Pool Statistics and Segment Statistics. Most database performance bottlenecks should be resolved if these top five events are eliminated or reduced.
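A rough equivalent of this list can be pulled from DBA_HIST_SYSTEM_EVENT. This is only a sketch under stated assumptions: the snapshot IDs are placeholders, the instance was not restarted between them, and events that were first recorded after the begin snapshot are dropped by the join. The report's own list also ranks CPU time alongside the wait events, which this query does not.

```sql
-- Top five non-idle wait events between two snapshots (placeholder IDs).
SELECT *
FROM  (SELECT e.event_name,
              e.wait_class,
              e.total_waits - b.total_waits AS waits,
              ROUND((e.time_waited_micro - b.time_waited_micro) / 1000000, 1)
                AS time_waited_s
       FROM   dba_hist_system_event b
              JOIN dba_hist_system_event e
                ON  e.dbid = b.dbid
                AND e.instance_number = b.instance_number
                AND e.event_id = b.event_id
       WHERE  b.snap_id = 970
       AND    e.snap_id = 1032
       AND    e.wait_class <> 'Idle'
       ORDER  BY time_waited_s DESC)
WHERE  ROWNUM <= 5;
```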

3. SQL Statistics

This section provides details of the SQL statements executed in the database during the load test. From a performance point of view, the primary concern for the application is usually the response time of transactions at peak load, and this section addresses the response times of the database queries behind them. The main subsections to look at are:

SQL Ordered by Elapsed Time: SQL statements that took the most elapsed time to execute.

SQL Ordered by CPU Time: SQL statements that consumed the most database server CPU time. Sorting and hashing are examples of CPU-intensive operations.

SQL Ordered by Gets: SQL statements that performed a high number of logical reads (from the buffer cache) while retrieving data.

SQL Ordered by Reads: SQL statements that performed a high number of physical disk reads while retrieving data.

SQL Ordered by Parse Calls: SQL statements that were parsed a high number of times.

SQL Ordered by Executions: SQL statements ranked by the number of times each was executed.
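The same rankings can be approximated from DBA_HIST_SQLSTAT, which stores per-snapshot deltas for each statement. The sketch below orders by elapsed time; changing the ORDER BY column gives the other orderings. The snapshot IDs are placeholders, and the range excludes the begin snapshot because each delta row covers the interval that ends at its snapshot.

```sql
-- Top ten statements by elapsed time between two snapshots (placeholder IDs).
SELECT *
FROM  (SELECT sql_id,
              SUM(executions_delta)                        AS executions,
              ROUND(SUM(elapsed_time_delta) / 1000000, 1)  AS elapsed_s,
              ROUND(SUM(cpu_time_delta) / 1000000, 1)      AS cpu_s,
              SUM(buffer_gets_delta)                       AS buffer_gets,
              SUM(disk_reads_delta)                        AS disk_reads,
              SUM(parse_calls_delta)                       AS parse_calls
       FROM   dba_hist_sqlstat
       WHERE  snap_id > 970
       AND    snap_id <= 1032
       GROUP  BY sql_id
       ORDER  BY elapsed_s DESC)
WHERE  ROWNUM <= 10;
```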

4. Time Model Statistics

This section breaks down the time spent processing SQL into components such as PL/SQL execution, parsing, sequence load and SQL execution.

- DB CPU: Total CPU time consumed by foreground (user) sessions during the snapshot interval; CPU used by background processes is not included.

- sql execute elapsed time: Total elapsed time spent executing SQL statements.

- DB time: Total time spent in database calls by foreground sessions; time spent by background processes is not included.
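These statistics are kept in DBA_HIST_SYS_TIME_MODEL as cumulative microsecond counters, so the value for an interval is the difference between two snapshots. A minimal sketch, again assuming placeholder snapshot IDs and no instance restart in between:

```sql
-- Time model breakdown for one snapshot pair (placeholder IDs).
SELECT e.stat_name,
       ROUND((e.value - b.value) / 1000000, 1) AS seconds
FROM   dba_hist_sys_time_model b
       JOIN dba_hist_sys_time_model e
         ON  e.dbid = b.dbid
         AND e.instance_number = b.instance_number
         AND e.stat_id = b.stat_id
WHERE  b.snap_id = 970
AND    e.snap_id = 1032
AND    e.stat_name IN ('DB time',
                       'DB CPU',
                       'sql execute elapsed time',
                       'parse time elapsed',
                       'PL/SQL execution elapsed time')
ORDER  BY seconds DESC;
```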

Automatic Database Diagnostic Monitor (ADDM) in Oracle Database 10g:

Overview

The Automatic Database Diagnostic Monitor (ADDM) analyzes data in the Automatic Workload Repository (AWR) to identify potential performance bottlenecks. For each of the identified issues it locates the root cause and provides recommendations for correcting the problem. An ADDM analysis task is performed, and its findings and recommendations are stored in the database, every time an AWR snapshot is taken, provided the STATISTICS_LEVEL parameter is set to TYPICAL or ALL. The ADDM analysis includes the following.

- CPU load

- Memory usage

- I/O usage

- Resource intensive SQL

- Resource intensive PL/SQL and Java

- RAC issues

- Application issues

- Database configuration issues

- Concurrency issues

- Object contention
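Since the automatic analysis depends on the STATISTICS_LEVEL setting mentioned above, it is worth checking the current value before expecting any findings. A small sketch; the ALTER SYSTEM line is left commented out because changing the parameter is a decision for the DBA.

```sql
-- Check the current setting; ADDM runs automatically for TYPICAL or ALL.
SELECT name, value
FROM   v$parameter
WHERE  name = 'statistics_level';

-- Only if the value is BASIC (requires the ALTER SYSTEM privilege):
-- ALTER SYSTEM SET statistics_level = TYPICAL;
```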

There are several ways to produce reports from the ADDM analysis, which will be explained later, but all follow the same format. The findings (problems) are listed in order of potential impact on database performance, along with recommendations to resolve the issue and the symptoms which led to its discovery. An example from my test instance is shown below.

    FINDING 1: 59% impact (944 seconds)
    -----------------------------------
    The buffer cache was undersized causing significant additional read I/O.

       RECOMMENDATION 1: DB Configuration, 59% benefit (944 seconds)
          ACTION: Increase SGA target size by increasing the value of parameter
             "sga_target" by 28 M.

       SYMPTOMS THAT LED TO THE FINDING:
          Wait class "User I/O" was consuming significant database time. (83%
          impact [1336 seconds])

The recommendations may include:

- Hardware changes

- Database configuration changes

- Schema changes

- Application changes

- Using other advisors

The analysis of I/O performance is affected by the DBIO_EXPECTED parameter, which should be set to the average time (in microseconds) it takes to read a single database block from disk. Typical values range from 5000 to 20000 microseconds. The parameter can be set using the following.

    EXECUTE DBMS_ADVISOR.set_default_task_parameter('ADDM', 'DBIO_EXPECTED', 8000);
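Before changing DBIO_EXPECTED it can help to see what ADDM is currently using. A minimal sketch that reads the default task parameter from the advisor dictionary view:

```sql
-- Current default for DBIO_EXPECTED (value is in microseconds).
SELECT parameter_value
FROM   dba_advisor_def_parameters
WHERE  advisor_name   = 'ADDM'
AND    parameter_name = 'DBIO_EXPECTED';
```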

Enterprise Manager

The obvious place to start viewing ADDM reports is Enterprise Manager. The "Performance Analysis" section on the "Home" page is a list of the top five findings from the last ADDM analysis task.

Specific reports can be produced by clicking on the "Advisor Central" link, then the "ADDM" link. The resulting page allows you to select a start and end snapshot, create an ADDM task and display the resulting report by clicking on a few links.

addmrpt.sql Script

The addmrpt.sql script can be used to create an ADDM report from SQL*Plus. The script is called as follows.

    -- UNIX
    @/u01/app/oracle/product/10.1.0/db_1/rdbms/admin/addmrpt.sql

    -- Windows
    @d:\oracle\product\10.1.0\db_1\rdbms\admin\addmrpt.sql

It then lists all available snapshots and prompts you to enter the start and end snapshot along with the report name.
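When the report is meant to cover a specific load test, one option is to take manual snapshots immediately before and after the run and then pick those two snapshots at the prompts. The sketch below assumes a SQL*Plus session with the privileges to call DBMS_WORKLOAD_REPOSITORY; the "?" shorthand is expanded by SQL*Plus to the Oracle home, which avoids hard-coding the path.

```sql
-- Bracket the workload with two manual AWR snapshots.
EXECUTE DBMS_WORKLOAD_REPOSITORY.create_snapshot;

-- ... run the load test here ...

EXECUTE DBMS_WORKLOAD_REPOSITORY.create_snapshot;

-- "?" is expanded by SQL*Plus to the Oracle home directory.
@?/rdbms/admin/addmrpt.sql
```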

An example of the ADDM report can be seen here.

DBMS_ADVISOR

The DBMS_ADVISOR package can be used to create and execute any advisor tasks, including ADDM tasks. The following example shows how it is used to create, execute and display a typical ADDM report.

    BEGIN
      -- Create an ADDM task.
      DBMS_ADVISOR.create_task (
        advisor_name => 'ADDM',
        task_name    => '970_1032_AWR_SNAPSHOT',
        task_desc    => 'Advisor for snapshots 970 to 1032.');

      -- Set the start and end snapshots.
      DBMS_ADVISOR.set_task_parameter (
        task_name => '970_1032_AWR_SNAPSHOT',
        parameter => 'START_SNAPSHOT',
        value     => 970);

      DBMS_ADVISOR.set_task_parameter (
        task_name => '970_1032_AWR_SNAPSHOT',
        parameter => 'END_SNAPSHOT',
        value     => 1032);

      -- Execute the task.
      DBMS_ADVISOR.execute_task(task_name => '970_1032_AWR_SNAPSHOT');
    END;
    /

    -- Display the report.
    SET LONG 1000000 LONGCHUNKSIZE 1000000
    SET LINESIZE 1000 PAGESIZE 0
    SET TRIM ON TRIMSPOOL ON
    SET ECHO OFF FEEDBACK OFF

    SELECT DBMS_ADVISOR.get_task_report('970_1032_AWR_SNAPSHOT') AS report
    FROM   dual;

    SET PAGESIZE 24

The value for the SET LONG command should be adjusted to allow the whole report to be displayed.
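Advisor task names have to be unique, so running the create block a second time with the same name fails until the earlier task is removed. A minimal cleanup sketch, assuming the task name used in the example:

```sql
-- Remove the task so the same name can be reused.
BEGIN
  DBMS_ADVISOR.delete_task(task_name => '970_1032_AWR_SNAPSHOT');
END;
/
```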

The relevant AWR snapshots can be identified using the DBA_HIST_SNAPSHOT view.
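For instance, a listing along these lines shows each snapshot with the interval it covers, which is usually enough to pick the start and end IDs for a task:

```sql
-- List available snapshots with the interval each one covers.
SELECT snap_id,
       instance_number,
       begin_interval_time,
       end_interval_time
FROM   dba_hist_snapshot
ORDER  BY snap_id;
```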

Related Views

The following views can be used to display the ADDM output without using Enterprise Manager or the GET_TASK_REPORT function.

- DBA_ADVISOR_TASKS: Basic information about existing tasks.

- DBA_ADVISOR_LOG: Status information about existing tasks.

- DBA_ADVISOR_FINDINGS: Findings identified for an existing task.

- DBA_ADVISOR_RECOMMENDATIONS: Recommendations for the problems identified by an existing task.
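As a sketch of how these views fit together, the queries below list the findings for one task and then the recommendations attached to them. The task name is the one from the DBMS_ADVISOR example and is only a placeholder here.

```sql
-- Findings for one task, highest impact first.
SELECT f.finding_id,
       f.type,
       f.impact,
       f.message
FROM   dba_advisor_findings f
WHERE  f.task_name = '970_1032_AWR_SNAPSHOT'
ORDER  BY f.impact DESC;

-- Recommendations attached to those findings.
SELECT r.finding_id,
       r.rec_id,
       r.rank,
       r.type,
       r.benefit
FROM   dba_advisor_recommendations r
WHERE  r.task_name = '970_1032_AWR_SNAPSHOT'
ORDER  BY r.finding_id, r.rank;
```

The two result sets share FINDING_ID, so they can be joined on task and finding when a single listing is preferred.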

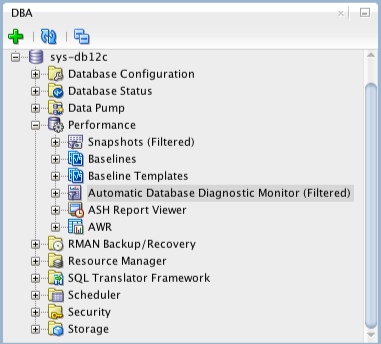

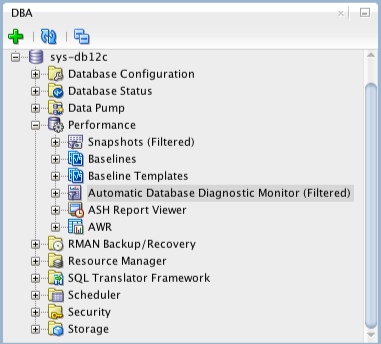

SQL Developer and ADDM Reports

If you are using SQL Developer 4 onward, you can view ADDM reports directly from SQL Developer. If it is not already showing, open the DBA pane "View > DBA", expand the connection of interest, then expand the "Performance" node. The ADDM reports are available from the "Automatic Database Diagnostics Monitor" node.